Every major technology shift has opened greenfield opportunities in cybersecurity: the rise of the internet enabled the founding of companies like McAfee, and the advent of cloud computing paved the way for innovators like CrowdStrike. We now stand at the brink of a new era in cybersecurity, driven by GenAI innovations in the age of Intelligence. This blog covers the key cybersecurity areas that GenAI is poised to disrupt.

- Impact of GenAI on existing cybersecurity workflows

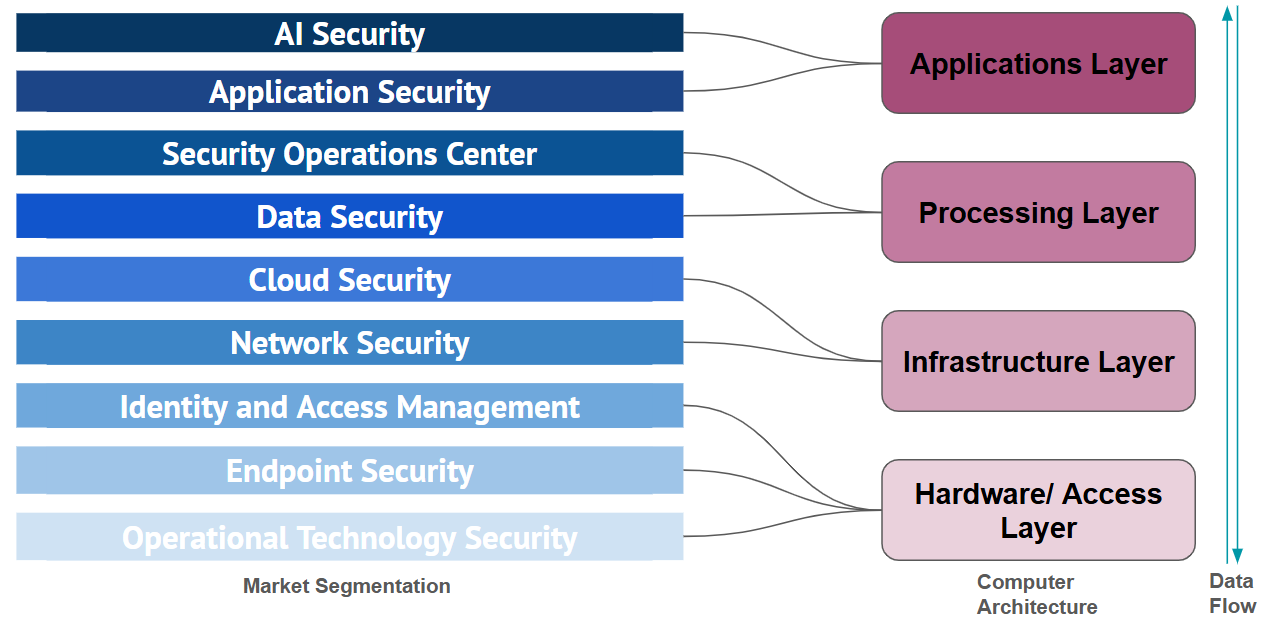

The cybersecurity ecosystem is filled with a wide array of tools and products spanning the seven cybersecurity layers, such as network, application, and identity. On average, organisations rely on 40 to 60 distinct security solutions to protect their digital environments. CISOs have long called for tool consolidation, not just for ease of use but also to detect attacks that point solutions, each watching a single surface area, cannot see. Attackers can enter the network through a vulnerability in one part of the system, move across it, and cause havoc in another. Threats can therefore move laterally across the security layers and remain undetected for days, if not months, before a large attack.

In early 2025, Marks & Spencer stores in the UK lost the ability to take digital payments, and customer data was exfiltrated (Source). The attackers gained access to the network through a third party, then moved through it and caused havoc in the Operational Technology systems. A consolidated security solution, with access to data from different verticals, would be better placed to detect such cross-vertical attacks. Because consolidation matters so much, incumbents like Proofpoint and Check Point have pursued a strategy of acquiring or building capabilities in-house. Notably, Palo Alto Networks has been strategically expanding its capabilities through acquisitions across various cybersecurity domains, including Protect AI for AI security (2025), IBM QRadar for SIEM (2024), and Dig Security for Data Security Posture Management (DSPM) (2023).

Such approaches, however successful, still fall short of “best of breed” point solutions in detecting specialised attacks. Delivering both the precision of point solutions and the efficiency of tool consolidation is exactly where AI comes into play. AI agents can be fine-tuned for particular verticals and given access to data and logs from different point solutions. These specialised agents can then collaborate, under shared orchestration, to surface uncommon threats across the stack. This enables CISOs to adopt their preferred “best-of-breed” solutions while integrating unified agents on top to streamline and consolidate security operations.
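To make the cross-layer idea concrete, here is a minimal Python sketch of the kind of correlation a unifying layer might run over alerts from separate point solutions. It is an illustration under stated assumptions, not a description of any vendor's product: the `Alert` fields, the `correlate_alerts` function, the 24-hour window, and the sample entities are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Alert:
    """One alert emitted by a point solution (hypothetical schema)."""
    layer: str          # e.g. "identity", "network", "application"
    entity: str         # host or account the alert refers to
    timestamp: datetime


def correlate_alerts(alerts, window=timedelta(hours=24), min_layers=2):
    """Return entities that raised alerts in at least `min_layers`
    distinct layers within a single time window."""
    by_entity = defaultdict(list)
    for alert in alerts:
        by_entity[alert.entity].append(alert)

    findings = {}
    for entity, items in by_entity.items():
        items.sort(key=lambda a: a.timestamp)
        for i, first in enumerate(items):
            # Alerts for this entity that fall inside the window starting at `first`.
            in_window = [a for a in items[i:] if a.timestamp - first.timestamp <= window]
            layers = sorted({a.layer for a in in_window})
            if len(layers) >= min_layers:
                findings[entity] = layers
                break
    return findings


# Hypothetical sample data: one account trips three layers within a few hours.
t0 = datetime(2025, 1, 1, 9, 0)
example_alerts = [
    Alert("identity", "svc-payments", t0),
    Alert("network", "svc-payments", t0 + timedelta(hours=2)),
    Alert("application", "svc-payments", t0 + timedelta(hours=5)),
    Alert("network", "laptop-17", t0 + timedelta(hours=1)),
]
findings = correlate_alerts(example_alerts)
print(findings)
```

In this sample only `svc-payments` is flagged, because it is the only entity with alerts from more than one layer inside the window. Each alert on its own might look routine to the tool that raised it; the signal appears only when the layers are viewed together, which is the gap an AI layer over best-of-breed tools is meant to close.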

The diagram shows the data flow between the different cybersecurity layers, with the AI layer sitting on top of the data from the other layers.

- Security for AI and Agents

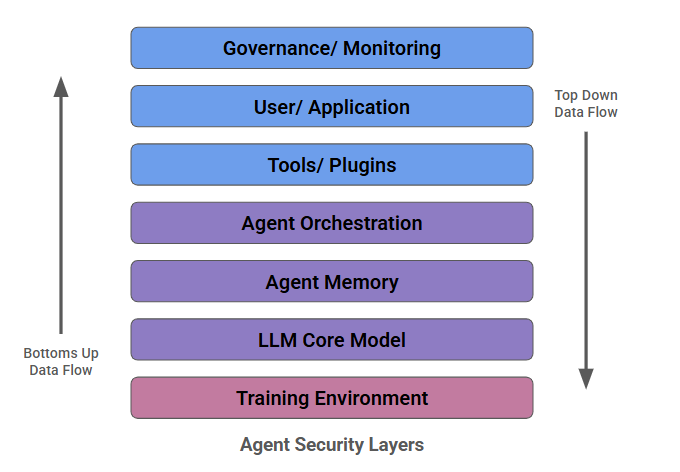

The proliferation of Generative AI and its use in enterprises and security operations is fundamentally reshaping the attack surface, creating entirely new vulnerabilities and avenues for exploitation that traditional security measures are not designed to address. The very architecture of GenAI systems, including the LLMs themselves, their training data, the APIs they interact with, and the agent-to-agent communication protocols, introduces novel risks. Since the launch of ChatGPT in 2022, prompt engineering attacks by both the public and adversaries, which aim to make the LLM behave in unintended ways, have been the largest attack vector. Adversaries can target applications built on agentic AI and LLMs through various methods, which fall broadly into two categories: top-down and bottom-up. In a top-down approach, the adversary directly targets the application that uses agentic AI. In a bottom-up approach, the adversary compromises the underlying data used to train the models or interferes with the agent development process.

As a result, various layers within the agentic architecture emerge as critical points of protection, as illustrated in the diagram below:

Adversaries can infiltrate the middle layers and move laterally across the agent stack. Shadow AI vulnerabilities, introduced by third-party AI applications used within organisations, can serve as the initial entry point. From there, adversaries can infiltrate the tooling layer and move laterally across other layers, mirroring the lateral movement typically seen in traditional seven-layer IT infrastructures. Startups are building solutions across this security layer. Lakera (founded in 2021, having raised nearly $30 million) secures both the training environment for LLMs and the models during deployment. SurePath AI (founded in 2023, having raised a total of $6.3M) focuses on the GenAI tools/plugins and shadow AI layer.

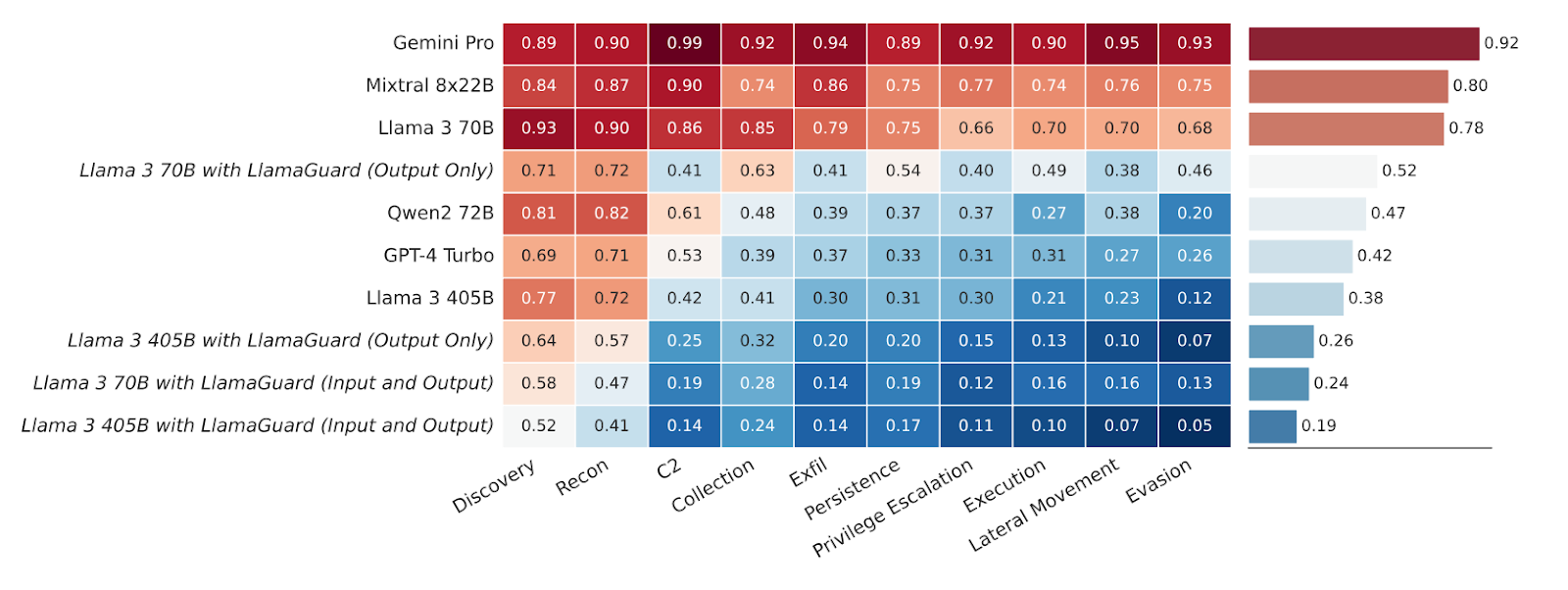

AI is not only enabling enterprises to defend themselves better; it is also helping adversaries develop a high volume of smarter attacks. This is giving rise to GenAI-driven threats. For example, since 2023, the number of phishing attacks using GenAI has increased by 1760%, with 70.8% of all attacks created using GenAI (Source). A study conducted by Meta found that LLMs are highly likely to comply with adversarial requests, such as generating sophisticated attacks, and that this susceptibility varies with their underlying training architecture. This has given adversaries a means to jailbreak models and build GPT models specifically for cybersecurity attacks. We are increasingly seeing ransomware-as-a-service (RaaS) offered on the dark web, built on GPT models like WormGPT and FraudGPT, which can launch malware at scale. Below is the result of the study for the different models and their compliance across the different MITRE ATT&CK categories.

Fig: Cyber attack helpfulness compliance rates by model and MITRE ATT&CK category, also showing LlamaGuard 3’s effect on compliance rates when used to guardrail Llama 3 models. Higher rates indicate less secure behaviour. (Source)

Agents are increasingly interacting with enterprise tools and software with far greater agency. Given that agents have demonstrated a lack of compliance with security standards, there is a growing need to enforce strict authentication and authorisation controls on the tools and systems they interact with.

- Rethinking IAM in the Intelligence Era

Given the level of access to tools, memory, and software that agents are given, it becomes imperative to build better authentication systems for agents, along with an IAM that can differentiate between AI and humans.

A simple issue with giving agents complete access is that they might do the exact opposite of what you prompted them to do, simply because of their probabilistic nature. Hence, every API key, service account, software bot, microservice, IoT device, and LLM-powered agent should be considered a distinct identity with its own set of permissions, access rights, and security requirements. These non-human identities (NHIs) are increasingly performing critical functions, accessing sensitive data, and interacting with other systems autonomously. Treating each one as a separate identity allows organisations to track agents, limit their access, and control them.
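The idea of giving every agent its own identity and permission set can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference to any real IAM product: `IdentityKind`, `Identity`, `AccessGate`, the `resource:action` scope format, and the `triage-agent` example are all hypothetical names.

```python
from dataclasses import dataclass, field
from enum import Enum


class IdentityKind(Enum):
    """Lets the gate tell humans and non-human identities apart."""
    HUMAN = "human"
    SERVICE_ACCOUNT = "service_account"
    AI_AGENT = "ai_agent"


@dataclass(frozen=True)
class Identity:
    name: str
    kind: IdentityKind
    scopes: frozenset  # explicit "resource:action" grants (hypothetical format)


@dataclass
class AccessGate:
    """Deny-by-default check that records every decision for later review."""
    audit_log: list = field(default_factory=list)

    def authorize(self, identity, resource, action):
        scope = f"{resource}:{action}"
        allowed = scope in identity.scopes  # anything not granted is refused
        self.audit_log.append((identity.name, identity.kind.value, scope, allowed))
        return allowed


# Hypothetical agent that may read and comment on tickets, and nothing else.
triage_agent = Identity(
    name="triage-agent",
    kind=IdentityKind.AI_AGENT,
    scopes=frozenset({"tickets:read", "tickets:comment"}),
)

gate = AccessGate()
can_read = gate.authorize(triage_agent, "tickets", "read")
can_delete = gate.authorize(triage_agent, "tickets", "delete")
print(can_read, can_delete)
```

The point of the design is that the agent's mistakes are bounded by its grants: even if the model decides to delete a ticket, the request is refused at the gate, and the refusal is logged against an identity that is clearly marked as an AI agent.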

IAM that covers NHIs, humans, and bot protection is shaping up to be a winner-takes-all market, especially since, as of 2025, frameworks and regulatory guidelines for agents remain undefined. The evidence suggests this consolidation is already beginning. Astrix Security recently raised $45 million in Series B funding, while established players like CyberArk are positioning their identity security platforms as comprehensive solutions for human, machine, and AI identities. The companies that establish dominant positions in agent security today will likely maintain those advantages throughout the broader agent adoption cycle. The EU AI Act and similar frameworks worldwide are in the process of creating compliance requirements that will mandate sophisticated agent identity management within specific timeframes.

Companies that build strong agent identity platforms, secure early enterprise adoption, and establish network effects will gain a lasting advantage—while those that delay risk falling permanently behind in what could be the most transformative cybersecurity technology shift since the rise of cloud computing.

The window for establishing market leadership in GenAI cybersecurity is both enormous and urgent. The demand for sophisticated AI security solutions is immediate and non-negotiable. Companies that develop comprehensive GenAI security platforms, establish early enterprise relationships, and build network effects around their solutions will likely capture disproportionate value as the market matures. We are witnessing the birth of an entirely new cybersecurity paradigm, where artificial intelligence serves as both the primary threat vector and the ultimate defence mechanism. The intelligence era demands intelligent security, and those who deliver it will define the future of digital protection.

The intelligence era presents both challenges and opportunities in the large cybersecurity landscape, which is ripe for change. If you are building something exciting in this domain, we'd love to hear from you. Reach out to us at info@specialeinvest.com or connect with me directly at pramoth.arun@specialeinvest.com.

To learn more about Speciale’s investments in disruptive technologies, please check our portfolio.